About me

I’m a Research Scientist on the Paradigms of Intelligence team at Google. I’m interested primarily in:

- Sequential Decision Making – how to use models such as RNNs, transformers, and state-space models in RL and beyond.

- Multi-agent systems – what systems can drive selfish agents towards cooperation?

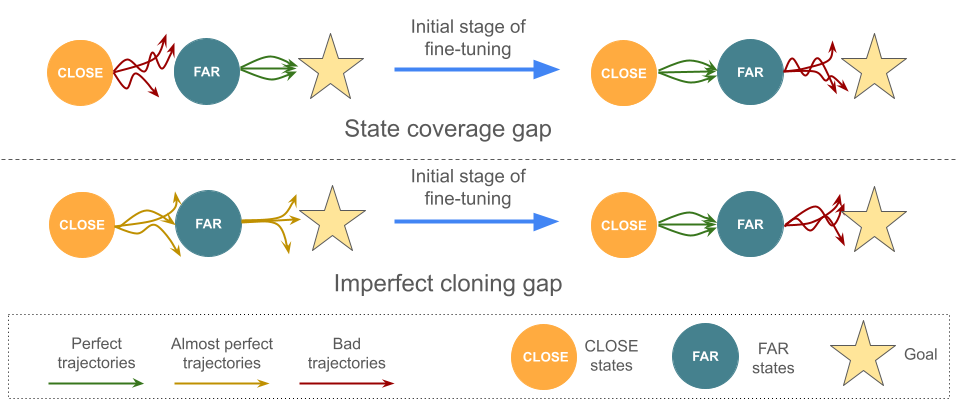

- Fine-tuning RL models – how to harness the power of foundation models in RL by fine-tuning them online.

- Adaptation in foundation models – how to use LLMs/VLMs in completely new decision-making scenarios.

News

- (January 2025) - I joined the Paradigms of Intelligence team at Google and moved to Zürich.

- (July 2024) - We talked about our work on forgetting in RL fine-tuning at the UCL Dark seminar. It was a great experience! You can see the recording here.

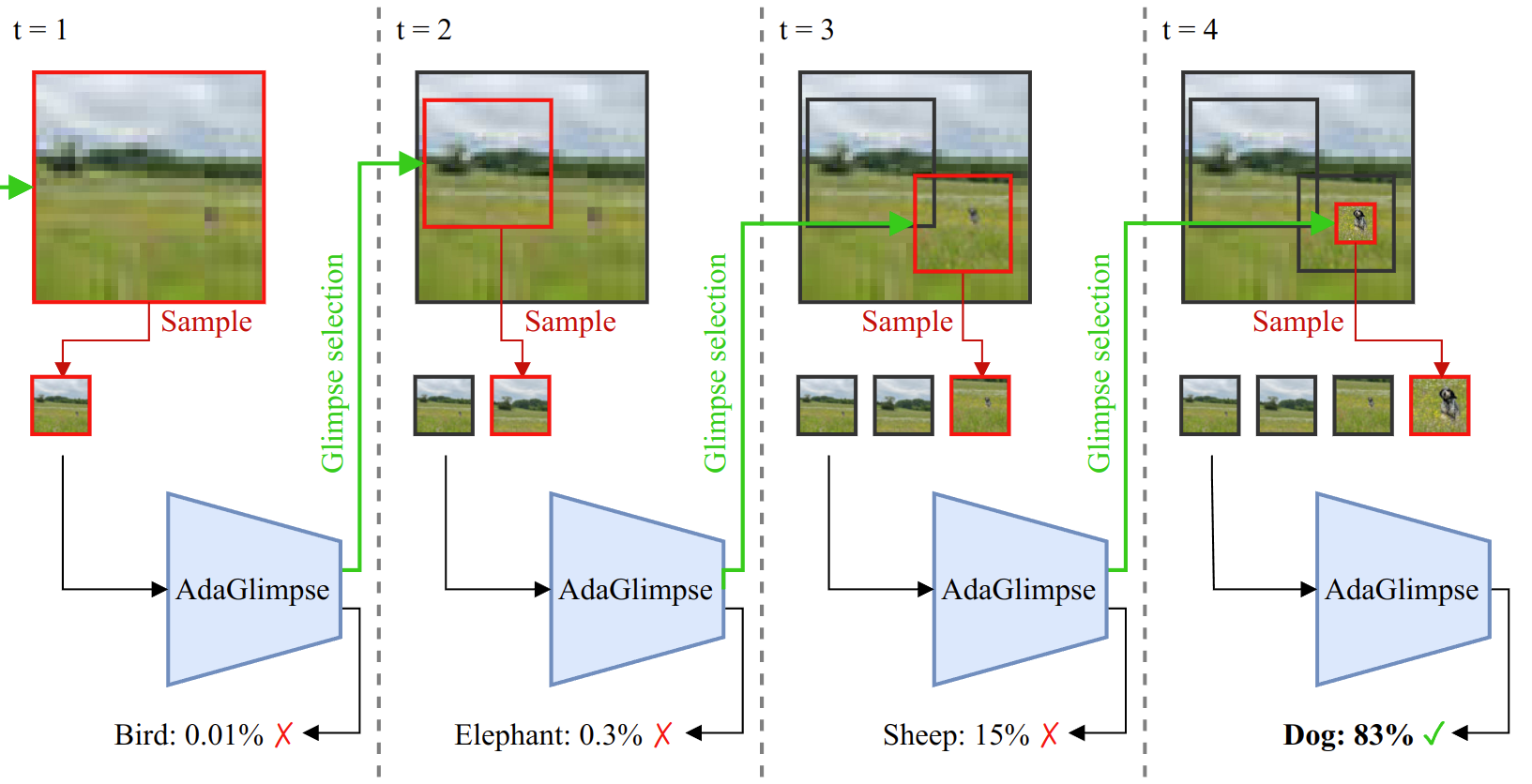

- (July 2024) - Our paper on Adaptive Visual Exploration was accepted at ECCV 2024. See you soon in Milan!

- (June 2024) - Check out our workshop paper on mixing and retrieving states in State-Space Models! To be presented at the Next Generation Sequence Models workshop at ICML 2024.

- (June 2024) - The NetHack bug-hunting story that Bartek Cupiał and I shared went viral on Twitter with over 2M views, got featured on ArsTechnica and HackerNews, and was even translated into Japanese.

- (May 2024) I’m very proud to have received the FNP Start scholarship for talented young researchers!

- (May 2024) Our paper on the issue of forgetting in RL fine-tuning was accepted as a spotlight at ICML 2024.

- (March 2024) - I’m very proud to co-organize the workshop on the Next Generation of Sequential Modelling Architectures at ICML 2024.

- (February 2024) I defended my PhD thesis with distinction! Huge thanks to my supervisor, Prof. Jacek Tabor, and everyone who helped me along the way.

- (January 2024) A paper on compositional generalization and modularity that I contributed to was accepted at ICLR 2024. Congrats to the whole team!

- (October 2023) Started work as a postdoc at IDEAS NCBR!

- (January 2023) I had the chance to present my work on continual reinforcement learning at the Warsaw.ai meetup.

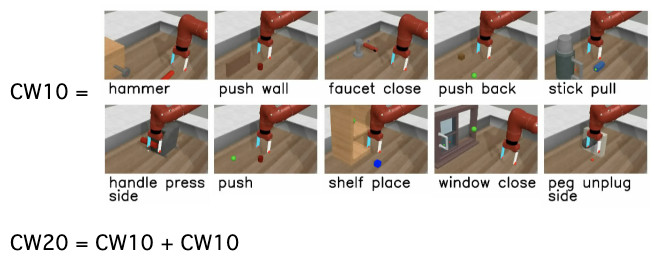

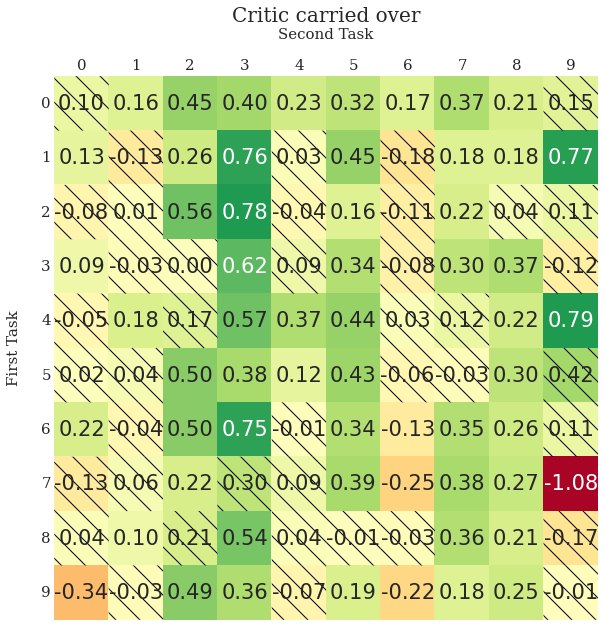

- (December 2022) I presented our work on Disentangling Transfer in Continual Reinforcement Learning at NeurIPS 2022.

- (July 2022) MLSS^N, which I co-organized, was terrific! Many thanks to all lecturers, co-organizers, and participants. Check out the lectures!

- (May 2022) Our paper on continual learning with weight interval regularization has been accepted to ICML 2022 as a short presentation.

- (March 2022) Our workshop on Dynamic Neural Networks has been accepted to ICML 2022. See you in Baltimore!

- (March 2022) Started a research internship with João Sacramento at ETH Zurich!

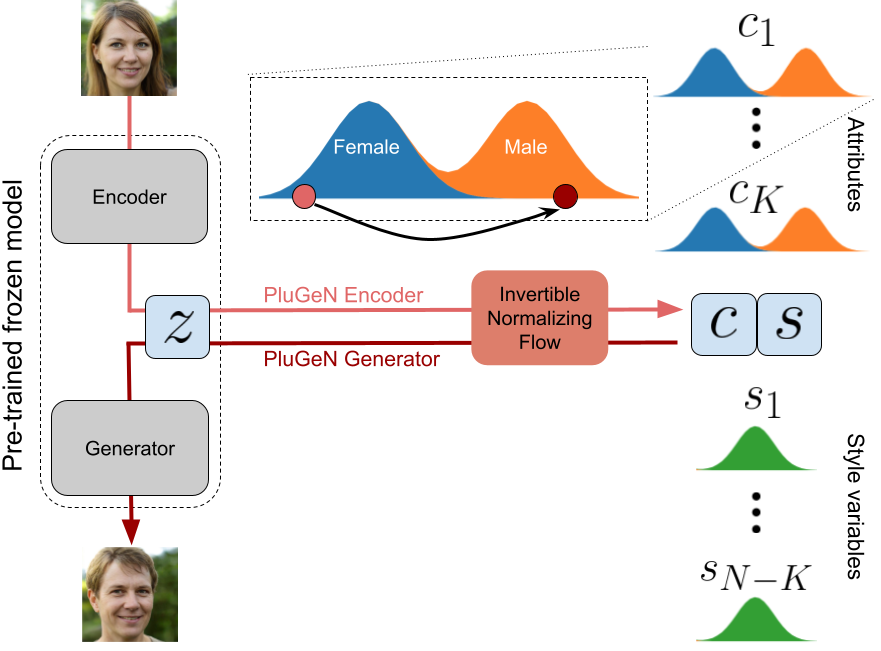

- (December 2021) PluGeN, our paper on introducing supervision to pre-trained models, was accepted to AAAI 2022.

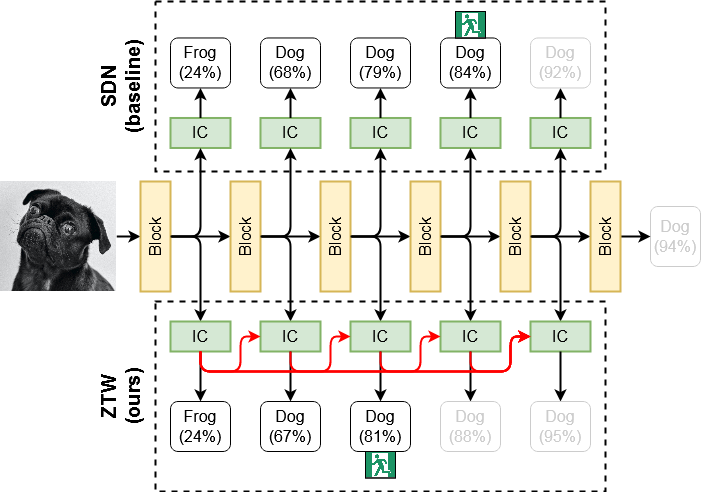

- (September 2021) Two of our papers, Zero Time Waste and Continual World, were accepted to the NeurIPS 2021 conference as poster presentations.

- (September 2021) A paper on closed-loop imitation learning for self-driving cars, which I worked on during my internship at Woven Planet, was accepted to the CoRL 2021 conference.

- (April 2021) Started my internship at Woven Planet Level-5 (previously Lyft Level-5), working on imitation learning for planning in self-driving cars.

Selected publications

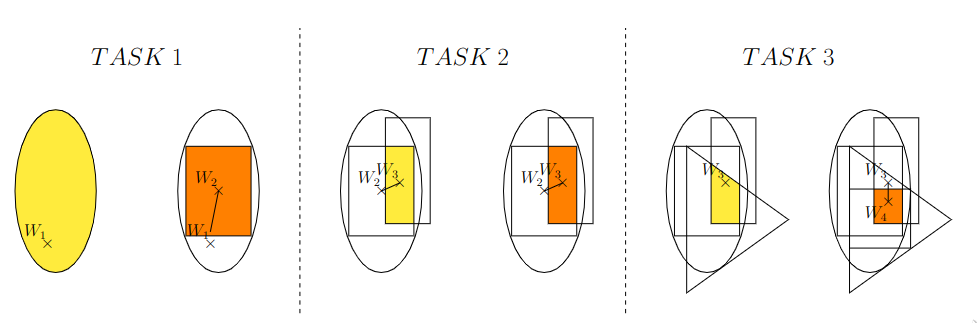

Disentangling Transfer in Continual Reinforcement Learning

Maciej Wołczyk*, Michał Zając*, Razvan Pascanu, Łukasz Kuciński, Piotr Miłoś

NeurIPS 2022

[Paper]
